Martijho-PathNet-Experiments


Evolved paths through a Modular Super Neural Network

Research question

How do different evolutionary algorithms influence the outcome of training a PathNet structure on multiple tasks? Which evolutionary strategies make the most sense when training an SNN? Can evolutionary strategies easily be implemented as a search technique for a PathNet structure? What happens if the search algorithm is adapted during multitask learning (from high exploration to high exploitation, or vice versa)?

Hypothesis

- OLD THOUGHTS

- "Different evolutionary algorithms would probably not change the PathNet results significantly for a limited number of tasks but might prove fruitful for a search for an optimal path in a saturated PathNet. Here, the search domain consists of pre-trained modules, hopefully with a memetic separation for each layer/module. This would ensure good transferability between tasks, and in the end, simplify the search and training of the task-specific softmax layer given the new task."

In a multi-task system, the algorithm used for search (and, by necessity, training) will influence the modularity of the knowledge in the modules (see the results in the first-path search). High exploration early on causes each module to be part of multiple permutations of paths, which in turn causes high modularity in training. We should see this effect in module reuse between tasks when an exploration-heavy algorithm is used on early tasks versus when it is not.

Experiment

Data

I perform an experiment using two datasets: MNIST and SVHN (Street View House Numbers). The datasets make up 6 tasks:

- Task 1: MNIST Quinary classification of classes [0, 1, 2, 3, 4]

- Task 2: MNIST Quinary classification of classes [5, 6, 7, 8, 9]

- Task 3: MNIST full classification of all classes

- Task 4: SVHN* Quinary classification of classes [0, 1, 2, 3, 4]

- Task 5: SVHN* Quinary classification of classes [5, 6, 7, 8, 9]

- Task 6: SVHN* full classification of all classes

We can add noise to any of these tasks to create even more subtasks if necessary. The asterisk next to SVHN means the dataset used is a simplified version of the cropped SVHN set. This version is used because of its similarities with MNIST. To bridge the gap further, the MNIST data used here is zero-padded from 28x28x1 matrices to 32x32x3 matrices, where the single channel in the original data is repeated to create "RGB". As a possible ultimate test, we could use the original SVHN set to classify the whole house number (multiple digits), but this introduces problems such as differing image resolutions.
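The MNIST conversion described above can be sketched with NumPy. This is a minimal illustration, not the code used in the experiments: the function name `mnist_to_svhn_format` is hypothetical, and placing two zero pixels on every side is an assumption, since the notes do not say where the padding goes.

```python
import numpy as np


def mnist_to_svhn_format(images):
    """Zero-pad 28x28 grayscale images to 32x32 and repeat the channel 3 times.

    images: array of shape (N, 28, 28) or (N, 28, 28, 1).
    Returns an array of shape (N, 32, 32, 3).
    """
    images = np.asarray(images)
    if images.ndim == 3:
        images = images[..., np.newaxis]  # add the channel axis: (N, 28, 28, 1)
    # Assumption: symmetric padding, two zero pixels per side (28 + 2 + 2 = 32).
    padded = np.pad(images, ((0, 0), (2, 2), (2, 2), (0, 0)), mode="constant")
    # Copy the single channel into three identical "RGB" channels.
    return np.repeat(padded, 3, axis=-1)


batch = np.ones((4, 28, 28), dtype=np.float32)
converted = mnist_to_svhn_format(batch)
```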

Implementation

In each experimental run, the set of tasks is trained using a given search algorithm, and each set of algorithms gets a couple of experimental runs. The search algorithms are tournament selection with different selection pressures, implemented by changing the tournament size. The algorithm sets are divided into 3 groups:

- Changing selection pressure

- There are two versions of this experiment, and in both the selection pressure is changed between tasks. For Low-to-High, the tournament size increases through the sizes [2, 5, 10, 15, 20, 25] for the tasks [1, 2, 3, 4, 5, 6]. For High-to-Low, the tournament size sequence is reversed so that the selection pressure decreases for each additional task we teach the PathNet.

- Locked selection pressure

- In this group, each of the two versions has a single tournament size that stays the same for all tasks. The sizes explored here are the two extremes, 2 and 25.

- Tournament with recombination

- Here we reduce the selection pressure by using tournament size 3 and by not replacing all paths in the tournament with the winner, as traditional tournament selection does. Instead, the two strongest paths recombine, and the offspring is mutated and replaces the loser of the tournament. Both parents survive to the next generation. This way, the convergence rate is reduced and the exploration is even higher.
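The two tournament variants above could be sketched as follows. This is an illustrative outline under stated assumptions, not the experiment's implementation: the function names, the in-place list representation of the population, and the `fitness`, `mutate` and `crossover` callables are all hypothetical placeholders.

```python
import random

# Tournament sizes per task for the two "changing selection pressure" versions.
LOW_TO_HIGH = [2, 5, 10, 15, 20, 25]
HIGH_TO_LOW = LOW_TO_HIGH[::-1]


def tournament_step(population, fitness, tournament_size, mutate, rng=random):
    """Traditional tournament: a mutated copy of the winner replaces every loser."""
    contestants = rng.sample(range(len(population)), tournament_size)
    winner = max(contestants, key=lambda i: fitness(population[i]))
    for i in contestants:
        if i != winner:
            population[i] = mutate(population[winner])
    return winner


def recombination_step(population, fitness, crossover, mutate, rng=random):
    """Size-3 tournament: the two strongest recombine, the mutated offspring
    replaces the loser, and both parents survive unchanged."""
    contestants = rng.sample(range(len(population)), 3)
    ranked = sorted(contestants, key=lambda i: fitness(population[i]), reverse=True)
    parent_a, parent_b, loser = ranked
    child = crossover(population[parent_a], population[parent_b])
    population[loser] = mutate(child)
    return loser
```

A larger `tournament_size` overwrites more of the population per step, which is why it acts as a higher selection pressure; the recombination variant overwrites only one individual per step.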

Instead of limiting the path search to a threshold accuracy (or a maximum number of generations if stuck), the search is run for 100 generations. Because of this, some analysis of convergence rate is introduced into the experiment. A simplified simulation of the search algorithms is performed in which a set of normally distributed numbers is exposed to different selection pressures. The simulated search is run for 500 generations, and the percentage of the total population containing the maximum of the set is plotted for each tournament size. The convergence rates of the tournament sizes under simulation conditions are then compared with the convergence rates during experimentation.

This convergence metric is calculated as an average Euclidean distance within a population. Each layer of each path is encoded as a sparse binary vector in which 1 marks an active module and 0 an inactive one. For each layer, each path's Euclidean distance to the average encoding vector in L-dimensional space is averaged over the population. In the end, a scalar number is reached for each layer, and this number approaches zero as the population converges. The metric is not expected to reach zero (all paths exactly the same) because of the mutation applied to paths before they replace losing paths during the search.
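The simplified simulation described above might look like the sketch below. Several details are assumptions made for illustration: one tournament per generation, no mutation, a population of 64, and the hypothetical function name `simulate_takeover`.

```python
import numpy as np


def simulate_takeover(tournament_size, population_size=64, generations=500, seed=0):
    """Return, per generation, the share of the population equal to the set's maximum.

    Assumptions: one tournament per generation and no mutation, so the
    winner's value simply overwrites every other contestant.
    """
    rng = np.random.default_rng(seed)
    population = rng.normal(size=population_size)
    best = population.max()
    share = np.empty(generations)
    for g in range(generations):
        contestants = rng.choice(population_size, size=tournament_size, replace=False)
        population[contestants] = population[contestants].max()
        share[g] = np.mean(population == best)
    return share


# One takeover curve per tournament size, ready for plotting.
curves = {k: simulate_takeover(k) for k in (2, 5, 10, 15, 20, 25)}
```

Without mutation the share can only grow, so each curve is a takeover curve whose steepness reflects the selection pressure of that tournament size.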

Also calculated is the convergence metric (diversity factor) for a random population of 64 paths in a PathNet with parameters [L=3, M=20, max_active_modules=3]. The diversity metric is the average Euclidean distance to the centroid genotype in each generation for each layer. To reach the diversity metric for a whole path, these distances are "added together"/"averaged"(????). For a random initialization of paths, a population's diversity score is 1.308 for each layer.
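A minimal sketch of the per-layer diversity metric follows. The metric itself follows the description above; the random initialization is an assumption (each layer draws between 1 and `max_active` distinct modules uniformly), so the printed values need not reproduce the 1.308 reported here. The function names are hypothetical.

```python
import numpy as np


def layer_diversity(encodings):
    """Mean Euclidean distance from each path's encoding to the population centroid.

    encodings: binary array of shape (population, M) for a single layer.
    """
    encodings = np.asarray(encodings, dtype=float)
    centroid = encodings.mean(axis=0)
    return np.linalg.norm(encodings - centroid, axis=1).mean()


def random_population(pop_size=64, layers=3, modules=20, max_active=3, seed=0):
    """Assumed initialization: 1..max_active distinct active modules per layer."""
    rng = np.random.default_rng(seed)
    pop = np.zeros((pop_size, layers, modules))
    for p in range(pop_size):
        for layer in range(layers):
            k = rng.integers(1, max_active + 1)
            active = rng.choice(modules, size=k, replace=False)
            pop[p, layer, active] = 1
    return pop


population = random_population()
per_layer = [layer_diversity(population[:, layer, :])
             for layer in range(population.shape[1])]
print(per_layer)
```

A fully converged population, where every path has the same encoding, gives a distance of exactly zero for that layer.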

Results

Plots

Search for the first path?

Research question

Is there anything to gain from performing a proper search for the first path versus just picking a random path and training the weights? In a two-task system, what is the difference between picking a first path and a PNN?

Hypothesis

I think performance will have the same asymptote, but it will be reached in fewer training iterations. The only thing that might be influenced by this path selection is that the modules in PathNet might have more interconnected dependencies. Maybe the layers are more "independent" when the weights are updated as part of multiple paths? This might be important for transferability when learning future tasks.

Suggested experiment

Perform multiple small multi-task learning scenarios. Two tasks should be enough, but it is necessary to show that modules are reused in each scenario. Test both picking a path and the full-on search for a path, and compare convergence time for the second task.

Run multiple executions of a first-task search for a path in a binary MNIST classification problem up to 99% classification accuracy on a test set (as in the original PathNet paper). Log the training counter for each optimal path and look at the average number of training iterations each path has received (so far: around 12?).

12 x 50 = 600, which means 600 backpropagations with batch size 16, or 600 x 16 = 9600 training examples shown.

Then run multiple iterations where random paths of the same average size as in the original experiment are trained for 600 iterations. Compare the classification accuracy of each path.

- Metrics

- Training counter for each module and average of each path

- Path size (number of modules in path) connected to capacity?

- Reuse of modules (transferability)

Implemented experiment

Problem: Binary MNIST classification (same as DeepMind's experiments, without the salt-and-pepper noise)

500 Search + Search:

- Search for path 1 and evaluate optimal path found

- Search for path 2 and evaluate optimal path found

- Each found path is evaluated on test set

- For each path, save: the path itself, its evaluated fitness, the number of generations used to reach it, and the average training each module received within the path (1 = 50 minibatches)

- Also store the number of reused modules from task 1 to task 2

- Generate the training plots for path 1 and path 2 and write them to PDF

500 Pick + Search:

- Generate a random path

- Train it the same number of times as the average training time of the first path from "search + search" with the same iteration index

- Evaluate the random path on the test set

- Search for path 2 and evaluate the optimal path found

- Store the same metrics as in search + search

- Generate the training plots for path 1 and path 2 and write them to PDF

Last, write log to file
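The module-reuse count stored for each run could be computed as in the sketch below. The path representation (one list of active module indices per layer) and both function names are assumptions for illustration. The random baseline uses the standard result that two independently chosen subsets of sizes a and b, drawn from M modules, share a*b/M modules on average.

```python
def module_reuse(path_a, path_b):
    """Count modules shared between two paths, per layer and in total.

    Each path is a list of layers; each layer is a collection of active
    module indices (hypothetical representation).
    """
    per_layer = [len(set(a) & set(b)) for a, b in zip(path_a, path_b)]
    return per_layer, sum(per_layer)


def expected_random_reuse(path_a, path_b, modules_per_layer):
    """Expected total overlap if path_b's modules were picked uniformly at random."""
    return sum(len(set(a)) * len(set(b)) / modules_per_layer
               for a, b in zip(path_a, path_b))


path_1 = [[0, 3, 7], [1, 2], [4, 5, 9]]
path_2 = [[3, 8], [2, 6, 1], [0]]
per_layer, total = module_reuse(path_1, path_2)          # ([1, 2, 0], 3)
baseline = expected_random_reuse(path_1, path_2, 10)     # 0.6 + 0.6 + 0.3 = 1.5
```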

Results

Note that all experiments in this section have been limited to a total of 500 generations per search. This stops models that get stuck in a local minimum from halting the experimental run indefinitely.

- Binary MNIST classification:

- Iterations: 600

- Population size: 64

- Acc threshold: 98%

- Tasks: [3, 4] then [1, 2]

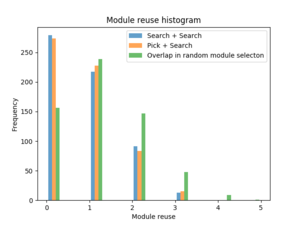

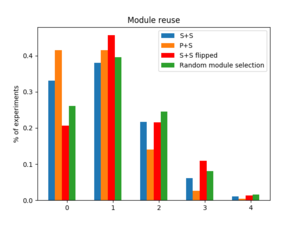

As we can see from the first plot, there is no indication of a significant difference in module reuse between the search+search and pick+search training schemes. When comparing the results with a random selection of modules (green bar), it is apparent that for these tasks the PathNet prefers to train new modules for each task rather than reuse knowledge in pre-trained modules. This differs from our hypothesis that end-to-end training causes confounded interfaces between layers, but we could argue that these results are caused by too little training, too simple training data or too much available capacity. Any of these would make the distance in parameter space between initialized parameters and "good enough" parameters rather small, so the gain from reusing modules is not large enough to justify the reuse.

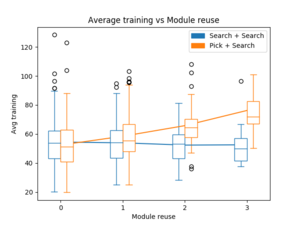

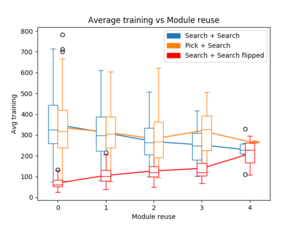

In the second plot, we see a trend that supports the claim that the training scenario used is too simple. For the pick+search results, the amount of module reuse increases with the average training for each path, while this seems to stay relatively constant for the search+search experiment. This could mean that in order to reach the classification accuracy threshold for the second task, after performing an end-to-end training for the first task, the paths need more training to understand the layer outputs for a higher amount of module reuse.

The last plot shows something unexpected. The results for search+search and pick+search indicate the same as the first plot: no significant difference in module reuse. But here, the reuse is shown for each layer in the models. For the first layer, there is a significant reduction in the number of reused modules. This is the opposite of what we would expect based on the results in "How transferable are features in deep neural networks?" (Yosinski et al.), where the first layers tended to be the most general and easily reusable. This is most likely a property of using MLPs and would disappear if the modules in question were replaced by convolutional modules. Fully connected NNs are poor at generalizing to image data because of the complex class manifolds of images.

- Initial reaction: This is a property of using MLPs and would disappear if the first layers in PathNet are replaced by convolutional modules.

- Fully connected NNs are poor at generalizing to image data since they lack the invariances that convolutional layers provide (most notably to translation). Each neuron would have to generalize to its corresponding image pixel and would therefore be highly task-specific.

- Quinary MNIST classification

- Iterations: 535

- Population size: 64

- Acc threshold: 97.5%

- Tasks: MNIST [0, 1, 2, 3, 4] then [5, 6, 7, 8, 9]

The experiment in this case follows the same execution structure as in binary MNIST; however, the PathNet structure used for this experiment consists of convolutional modules.

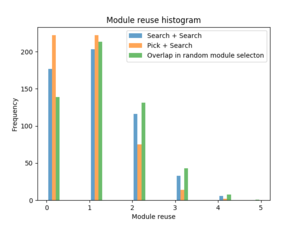

In the "module reuse histogram" we see evidence that the original hypothesis is correct. As the amount of reuse increases, the likelihood of finding a model with that amount of reuse goes down, but with the P+S training scheme a higher amount of reuse is less likely than with S+S. We still see the tendency to train modules from scratch rather than reuse them, but this effect is reduced for S+S, and we suspect that for tasks with an even steeper learning curve the frequency of 0 reuse for S+S would drop below that of the random module selection.

The second plot shows that the effect we saw for binary MNIST classification has been reduced. There could still be a divergence between S+S and P+S, however. It seems the average amount of training the second path receives goes down with the amount of reuse for S+S. If one task is much easier to learn than the other, the average amount of training for each path would be significantly different. If task A is simpler and receives less training, a higher amount of reuse should cause a decline in the average training amount, since the second path reuses more modules with less training each. The opposite is true if task B is simpler: a higher level of reuse would then cause the second path to use modules from path 1 that have received more training. In the second plot, the decline in average training for higher levels of reuse would then indicate that task A is simpler than task B.

- TEST BY FLIPPING TASK A AND B

- Quinary MNIST classification with flipped S+S

Figure (reuse by layer): Amount of module reuse for each layer in S+S and P+S searches, alongside the amount of reuse when randomly selecting modules.

Other possible experiments

Gradual Learning in Super Neural Networks

Research question

Can the modularity of the SNN help show what level of transferability there is between modules used in the different tasks in the curriculum? How large is the reduction in training necessary to learn a new task when a saturated PathNet is provided, compared to learning de novo?

Hypothesis

By testing which modules are used in which optimal paths, this study might show a reuse of some modules in multiple tasks, which would indicate the value of curriculum design. A high level of reuse might even point towards the possibility of one-shot learning in a saturated SNN.

Suggested Experiment

Train an RL agent on a simple toy environment such as LunarLander from OpenAI Gym. This requires some rework of the reward signal from the environment to fake rewards for the subtasks in the curriculum. Rewards in early subtasks might be clear-cut values (1 if the sub-goal is reached, 0 on failure).

- Read up on curriculum design techniques

Then create a sequence of sub-tasks gradually increasing in complexity, and search for an optimal path through the PathNet for each of the sub-tasks. This implementation would use some version of Temporal Difference learning (Q-learning), and each path would represent some approximation of a value function.
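As a reminder of the learning rule involved, the sketch below shows a single Q-learning temporal-difference update together with the clear-cut sub-goal reward mentioned above. It uses a plain table in place of a PathNet path as the value-function approximator, which is a deliberate simplification; the function names and the `alpha` and `gamma` defaults are assumptions.

```python
import numpy as np


def subgoal_reward(reached_subgoal):
    """Clear-cut curriculum reward: 1 if the sub-goal was reached, 0 otherwise."""
    return 1.0 if reached_subgoal else 0.0


def q_update(q, state, action, reward, next_state, done, alpha=0.1, gamma=0.99):
    """One tabular Q-learning step: move Q(s, a) towards the TD target."""
    target = reward if done else reward + gamma * q[next_state].max()
    q[state, action] += alpha * (target - q[state, action])
    return q


q_table = np.zeros((2, 2))  # 2 states x 2 actions, purely illustrative
q_update(q_table, state=0, action=1, reward=subgoal_reward(True),
         next_state=1, done=True)
```

In the proposed experiment the table lookup would be replaced by a forward pass through the current path, with the same TD target driving backpropagation.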

Capacity Increase

Research question

Can we estimate the decline in needed capacity for each new sub-task learned from the curriculum? How "much" capacity is needed to learn a new meme?

Hypothesis

Previous studies show a decline in needed capacity for each new sub-task (cite: Progressive Neural Networks-paper). If a metric can be defined for measuring the capacity change, we expect the results to confirm this.